The Quest for a Trusted AI Shopping Assistant

Imagine asking your personal AI assistant or a chatbot, "Find me the best noise-canceling headphones for under $200 that are built to last." Can you trust that its answer isn’t just the product with the highest affiliate kickback? Or the one backed by the biggest ad budget? Or the item a platform happens to need to clear from its warehouse?

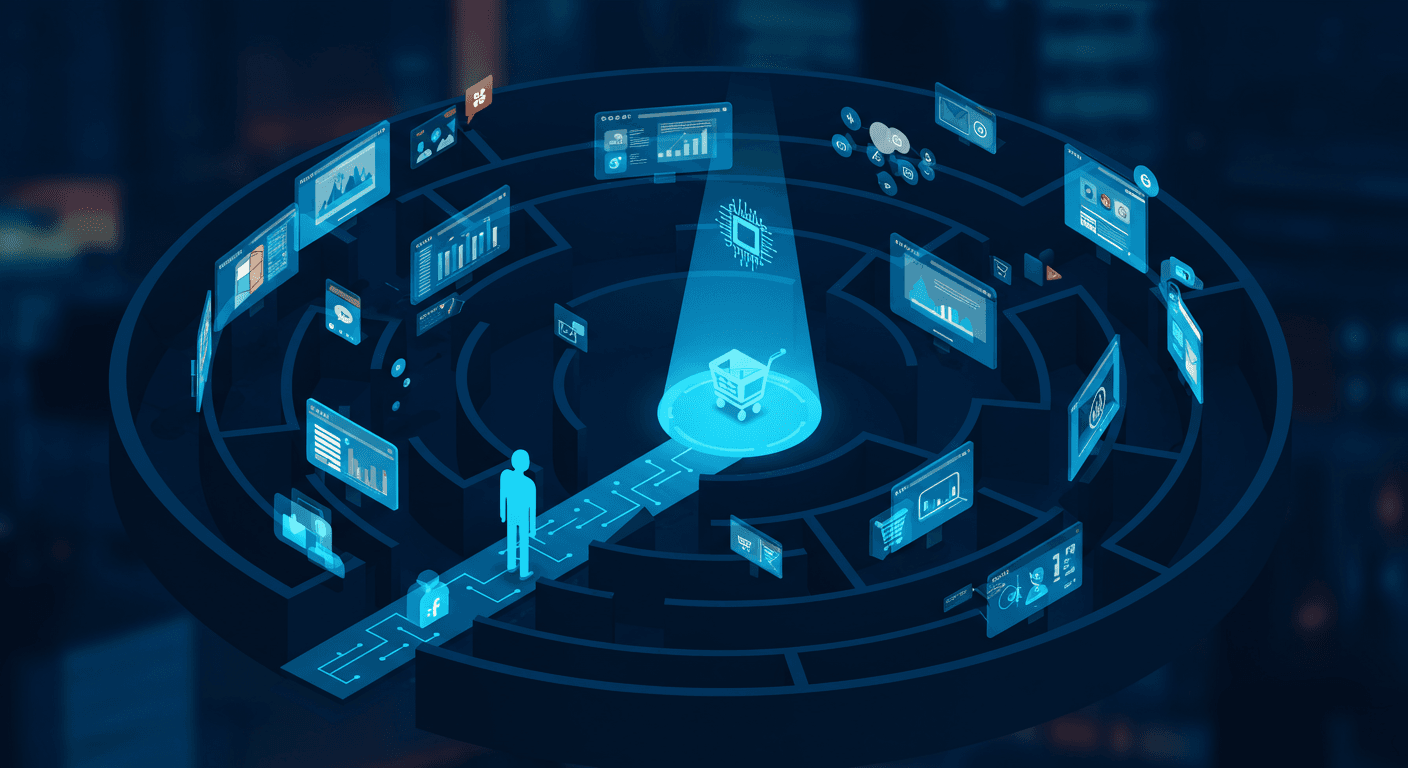

The age of the AI assistant is here. Its promise is profound: a system that operates as a trusted layer of digital commerce, working for the user rather than functioning as another marketing channel. Yet this quest is immediately confronted by a single, corrosive question that undermines every recommendation, every search result, and every curated feed we see today:

Who is this AI assistant working for?

Is this assistant your advocate, tirelessly searching for the best possible outcome for you? Or is it functioning as a commercial proxy, quietly optimizing for the interests of unseen sponsors?

Answering this question is the first and most critical step on the journey to building an AI you can truly trust. Without it, no AI assistant — no matter how advanced — can credibly serve as the foundation of future commerce.

Part I: The Anatomy of Distrust

Trust in an AI shopping assistant is not a single metric. It rests on two intertwined pillars:

Intent: Do I believe the assistant is genuinely working for my best interests? Is its core motivation aligned with mine?

Competence: Do I believe the assistant is capable of finding the best answer? Is its data accurate, its understanding nuanced, and its logic sound?

The current digital ecosystem fails on both fronts. It is an architecture of distrust, built on a foundation of misaligned incentives and riddled with inaccuracies.

The Principal-Agent Problem: The Economics of Misaligned Intent

The primary threat to trust is not a flaw in technology but in economics. In theory, platforms act as agents on behalf of users, helping them find the right product. But because users do not pay for discovery, the true paymasters are sellers and advertisers. The result is the classic Principal–Agent Problem: the platform serves a different master than the one it is supposed to represent. This structural misalignment takes several forms:

The Marketplace Dilemma: Amazon is the clearest example. It operates both as the world’s largest marketplace and as a retailer of private-label goods, which is a conflict of interest. It is incentivized to promote its own products, such as AmazonBasics batteries, over higher-rated competitors, and to use internal data to identify profitable categories, launch competing products, and then privilege them in results.

The Pay-to-Play Ecosystem: On marketplaces such as Amazon, third-party sellers must invest heavily in Sponsored Product ads to dominate search results. As a result, users often see the products that are most profitable for the platform rather than the ones that are most relevant to them.

The Discovery Tax: Google monetizes attention, shrinking organic results in favor of paid listings. Sponsored ads now crowd the search page, often indistinguishable from genuine recommendations. This “tax on relevance” forces businesses to pay for visibility while increasing user effort to distinguish ads from authentic results.

The Affiliate Conflict: Review platforms and influencers appear neutral but often rely on affiliate commissions. Their incentive is to recommend products with the highest payout, not the highest quality—quietly tipping decisions toward their own gain.

Now this problem is expanding to general-purpose AI assistants. Conversational systems like ChatGPT or Gemini build rapport with users and handle complex queries. But as they search for sustainable business models, they face the same economic drivers — hidden partnerships, preferential treatment of sellers, or embedded ads. These assistants risk becoming the most persuasive biased agents yet, embedding influence in the flow of natural conversation.

In ChatGPT’s case, the move toward in-chat commerce, where users can “Buy it in ChatGPT” and the platform takes a fee from merchants, creates a direct incentive misalignment, if not today then tomorrow, even though the company claims that results remain unbiased. The assistant is no longer just providing neutral recommendations; it has a financial stake in which sellers or products it surfaces. What looks like a seamless experience for the user risks becoming a subtle form of commercial steering.

The Competence Gap: The Problem of Unintentional Inaccuracy

Even if incentives were perfectly aligned, trust in an AI assistant would still be undermined by a second challenge: the unreliability of the data it must rely on. Failure often stems not just from bad intent, but also from flawed execution within a broken information ecosystem.

The Data Dilemma and the Limits of Real-Time Search

For an agent to be effective, it must have access to real-time product and pricing data across sellers. This is not simply a technical hurdle but a strategic one. Platforms like Google and Amazon have built enormous structured catalogs — Google alone lists over 1 billion products — yet they restrict access, creating an invisible moat. By design, this prevents aggregation and comparison, blocking fair competition and limiting transparency.

A naïve solution is to lean on real-time web search. But research shows this approach is slow, often inaccurate, and vulnerable to the very platforms it attempts to audit. Without structured, verifiable access, assistants cannot reliably deliver results.

Manipulated Social Proof: The Weaponization of Reviews

When data is uncertain, users turn to reviews. But reviews themselves are compromised. Fake ratings, paid testimonials, and inflated positivity systematically distort perceptions of product quality. Platforms have little incentive to aggressively police fraud, since positive reviews increase conversions. And with AI tools, it is now easier than ever to mass-produce reviews and responses that make a product look genuine. The result is a carefully managed gray zone: reviews not so false as to be obvious, but positive enough to drive purchases.

The Algorithmic Challenge: The Difficulty of Nuance

Even with better data, programming an assistant to interpret human requests is complex. Queries like “a good, cheap laptop for college” are nuanced. “Good” could mean long battery life, a comfortable keyboard, or processing power; “cheap” is relative. The assistant must translate vague language into precise trade-offs, a process prone to misunderstanding.

Even if an agent understands intent, it can still fail at personalization: overemphasizing one factor (e.g., price) while ignoring another (e.g., durability), or missing context altogether (such as when a purchase is a gift). Algorithms must encode priorities, but these programmed values may not match a user’s real preferences at the moment.
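To make the trade-off problem concrete, here is a minimal sketch of how a vague request might be turned into explicit, user-controlled weights. Everything in it is an assumption for illustration: the `Laptop` fields, the normalized 0-to-1 scores, the product names, and the weight values are hypothetical and are not part of any real catalog or protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Laptop:
    """Hypothetical catalog entry; every score is normalized to 0..1."""
    name: str
    price_score: float       # higher means cheaper
    battery_score: float     # higher means longer battery life
    durability_score: float  # higher means sturdier


def weighted_score(item: Laptop, weights: dict[str, float]) -> float:
    """Weighted average of the attributes named in `weights`."""
    total = sum(weights.values())
    return sum(getattr(item, attr) * w for attr, w in weights.items()) / total


def best_match(items: list[Laptop], weights: dict[str, float]) -> Laptop:
    """Return the item with the highest score under the given weights."""
    return max(items, key=lambda item: weighted_score(item, weights))


# Illustrative data only.
LAPTOPS = [
    Laptop("BudgetBook", price_score=0.9, battery_score=0.5, durability_score=0.3),
    Laptop("RoadWarrior", price_score=0.4, battery_score=0.9, durability_score=0.8),
]

# Two plausible readings of "a good, cheap laptop for college".
PRICE_FIRST = {"price_score": 0.7, "battery_score": 0.2, "durability_score": 0.1}
DURABILITY_FIRST = {"price_score": 0.2, "battery_score": 0.3, "durability_score": 0.5}

if __name__ == "__main__":
    print(best_match(LAPTOPS, PRICE_FIRST).name)
    print(best_match(LAPTOPS, DURABILITY_FIRST).name)
```

The same two products produce different winners depending on which reading of the request is encoded, which is the failure mode described above: if the weights are fixed by the system rather than chosen by the user, the "best" result may not be the one the user actually wanted.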

A Hierarchy of Challenges

Not all of these problems are equal. The deepest barrier is still the business model — a systemic issue that ensures misalignment by design. Next is the data moat: a vast and complex challenge, technically solvable but guarded by incumbents who restrict access. Finally, there is the algorithmic nuance problem: difficult but tractable once incentives and data integrity are addressed. With the right foundation, better personalization and recommendation are achievable through iteration and innovation.

Part II: A New Foundation for Trust

This hierarchy forces a critical realization: the quest to build a trusted agent has been focused on the wrong problem. We've been trying to build a better penthouse on a foundation of sand. The most difficult obstacles are not in the AI itself. Algorithmic nuance is a solvable problem. The true blockers are the foundational layers: a business model that forces incentive misalignment and a data ecosystem locked behind proprietary walls.

The question then becomes: what if we could solve the two hardest problems, the misaligned business model and the walled garden of data, once, in a way that is open, transparent, and available to all? By doing so, we create the necessary conditions for trust to emerge, making the subsequent challenge of distribution one worth solving.

Decoupling the Agent from the System: The Protocol Approach

Think of how email works. You can switch from Gmail to Outlook to a privacy-focused service like ProtonMail because they all rely on open, universal protocols such as SMTP (with IMAP or POP3 for retrieval). These protocols handle the fundamental mechanics of sending and receiving messages. This creates a competitive, innovative ecosystem of email clients (the "assistant" layer). If a user doesn't like their client, they can switch without losing their identity or their data.

This is the path forward for AI assistant–driven commerce: a protocol-based approach that separates the foundational layer (incentives and data) from the application layer (AI assistant). By solving misaligned incentives and data access at the protocol level, we allow the assistant layer — the intelligent interface that interprets user intent — to flourish without inheriting systemic flaws.

Solving these two hard problems transforms the third problem — building the assistant — into a world of user optionality. Developers can create diverse assistants tailored to different values or use cases. Users can select the one that best fits their needs, confident that every agent built on the protocol is structurally aligned to serve them.

Realigning the Engine: A New Economic Model for Trust

Solving the economic problem means rebuilding how product discovery, the matching of a user's intent to products, interacts with monetization. Today, this matching is determined in opaque black boxes, where advertiser bids distort outcomes and erode trust.

The Intents Protocol offers a different model. By operating on an unbiased and enriched product catalog and open logic, it makes product discovery verifiable, transparent, and user-defined. “Best” is no longer dictated by what is most profitable for a platform, but by what the user values in that moment — whether lowest price, highest durability, fastest delivery, or ethical sourcing.

The outcome is clear: discovery that can be trusted, audited, and aligned with the user, not the intermediary.

This verifiable discovery algorithm creates a trusted "relevance context" for every query. Within this context, the economic model can thrive without corrupting the results:

A Trusted Consideration Set is Established: The protocol first uses its verifiable algorithm to determine the set of products that are genuinely relevant to the user's request. This process is shielded from direct financial influence.

Sellers Compete Within the Context: Sellers whose products are included in this trusted set can then bid for priority. Their financial bids don't change the set of relevant products; they only influence the ranking within that pre-approved set. They are bidding for the user's attention in a fair contest, where the merit of their product has already been verified.
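The two steps above can be sketched in a few lines. This is only an illustration under stated assumptions, not the Intents Protocol's actual algorithm: the `Offer` type, the `relevance` score, the `min_relevance` threshold, and the rule of ordering by bid with relevance as a tie-breaker are all hypothetical choices made to show the shape of the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    """Hypothetical record pairing a product with a seller's bid."""
    product: str
    relevance: float  # assumed output of the verifiable, bid-blind relevance step (0..1)
    bid: float        # seller's bid for priority


def consideration_set(offers: list[Offer], min_relevance: float) -> list[Offer]:
    """Step 1: decide eligibility from relevance alone; bids are never read here."""
    return [o for o in offers if o.relevance >= min_relevance]


def rank_within_set(eligible: list[Offer]) -> list[Offer]:
    """Step 2: bids reorder the eligible offers (assumed rule: higher bid first,
    relevance as tie-breaker). Nothing is added to or removed from the set."""
    return sorted(eligible, key=lambda o: (o.bid, o.relevance), reverse=True)


def recommend(offers: list[Offer], min_relevance: float = 0.7) -> list[Offer]:
    """Run both steps in order: filter on merit, then rank by bid."""
    return rank_within_set(consideration_set(offers, min_relevance))


# Illustrative data only.
OFFERS = [
    Offer("SturdyPhones", relevance=0.9, bid=0.10),
    Offer("ClearSound", relevance=0.8, bid=0.50),
    Offer("FlashyBuds", relevance=0.3, bid=5.00),  # highest bid, not relevant
]

if __name__ == "__main__":
    for offer in recommend(OFFERS):
        print(offer.product)
```

In this sketch the highest bidder never appears in the output because it fails the relevance check in step 1, while the two relevant products are ordered by their bids in step 2. A real design could blend bid and relevance differently within the set; the property that matters here is that step 1 never looks at `bid`.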

This structure reshapes the system:

Users trust the process because every recommendation is rooted in objective facts. The rationale behind results is transparent and auditable.

Merit is rewarded because inferior products cannot buy their way in. Entry depends on quality, not spend.

Sellers still compete economically, but their payments are channeled productively within trusted guardrails. The model is no longer pay-to-play; it is pay-to-perform.

By tying monetization to discovery within a verifiable context, the protocol resolves the Principal–Agent problem. The system’s profitability flows directly from user satisfaction. Agents and platforms are finally serving the right master—the user—because that is the only way to succeed.

The result is a sustainable economic engine powered by trust itself.

The Quest Begins

The vision we have laid out is not a simple one. The challenges of a broken business model and a fragmented data ecosystem are the most difficult and deeply entrenched problems of the digital age. Solving them is a monumental task.

But it is a task that must be undertaken.

This is the mission we are embarking upon. Intents Protocol is our answer to this challenge. It is the open, foundational layer designed to solve the problems of incentive alignment and verifiable relevance once and for all. And to prove it is possible, we are building the first shopping assistant on top of it: Inomy.

The quest for an agent you can trust is a long and arduous one. It's a journey to build a system that can finally, and verifiably, answer the question, "Who is it working for?" with a single, unambiguous answer: "You."