The original promise of online advertising was simple: it funded the open web while helping people discover new products and services. As we argued in our earlier discussion of why the internet needs an open monetization layer, that balance has fractured. Over time, keyword auctions and revenue-driven optimization shifted the system away from user value, leaving it vulnerable to fraud, manipulation, and declining trust.

At the same time, AI is reshaping digital commerce. Large language models can now take on the “decision” work (researching, comparing, and filtering) that users once shouldered alone. This creates a paradox. Advertising remains essential, but in an AI-driven world the old advertising model faces a dual threat. It becomes ineffective when AI assistants summarize information instead of sending users to pages that carry ads, and dangerously influential if commercial bias is embedded directly into their answers.

This is not a challenge that can be solved by minor adjustments to keyword auctions. The shift demands a structural rethink of how intent connects to commercial outcomes. Early experiments in AI advertising have exposed the limitations of applying old models to new technology. A different approach is needed: one that aligns incentives, protects trust, and makes intent the basis of discovery.

AI Has Changed the Question: Why The Keyword Model Is Obsolete

For over two decades, digital advertising has been built on a simple mechanic: the keyword auction and links. You typed “best running shoes,” and Google returned a ranked page of links — some organic, some paid. In that model, the link itself was the answer. Clicking through was how discovery worked.

Over time, though, the model bent under pressure. Ads began appearing too early or in the wrong places, optimized more for revenue than for relevance. Keywords, once a rough stand-in for intent, became blunt instruments — easy to game, stripped of nuance, and riddled with fraud, inflated clicks, and user fatigue.

Now, with AI assistants reshaping how people ask questions, the “link-as-answer” framework is unraveling. Instead of links to chase, users expect clear, direct solutions in the flow of conversation.

Large language models no longer return lists of links; they deliver synthesized answers. When you ask, “What’s the best laptop for photo editing under $1,500?” you are not looking to be handed ten links to ads and blogs and told to figure it out yourself; you want a clear, direct solution. The link-based model does not support this kind of discovery, so we need a new form of advertising.

Early LLM Advertising: "Lipstick on a Familiar Pig"?

As links give way to direct answers, advertisers and platforms are scrambling to retrofit monetization into AI chat. The tactics vary, but the logic is familiar — repackage old ad mechanics for a conversational interface. Some common tactics include:

Embed ads directly into answers: Microsoft’s Copilot experiments show the most proactive form of this. Ads appear above, below, and even within AI-generated responses. And formats like “Ad Voice” narrate the sponsor’s message as part of the answer itself. CTRs rise, but so does the risk: when the line between response and promotion blurs, trust erodes.

Sponsor the next question: Perplexity’s model pushes paid prompts into follow-up queries. Advertisers don’t control the content of the answer, only the nudge toward a new direction. It’s transparent by design, but fragile in practice. Users inevitably wonder: why that prompt and not another? The neutrality of the whole experience comes under suspicion.

Insert ads into generative summaries: Google’s AI Overviews bring search’s auction model into the answer itself. Ads can appear inside a summary if deemed contextually relevant. The tactic defends Google’s ad empire, but it also raises the oldest question in advertising: am I looking at the “best” answer, or the “best-paid” one? Google is also experimenting with a token auction model for LLMs.

Turn the assistant into a store: OpenAI, under pressure to monetize, is exploring in-chat commerce. Instead of pointing users elsewhere, ChatGPT could become the checkout counter, recommending and transacting within the same interface. This cuts out friction, but also shifts the assistant’s role from a neutral guide to a marketplace operator, with all the incentive conflicts that implies.

Additionally, Generative Engine Optimization (GEO) is emerging as the organic counterpart to AI chatbot ads. In this case, instead of bidding for placement, brands optimize their content to be ingested, cited, and surfaced by LLMs. That means writing with clarity, structured data, and authority signals so AI prefers it when constructing answers. In effect, GEO is the new SEO — only the competition isn’t for clicks, but for inclusion inside the machine’s output.

Recent research shows how subtle, algorithmically derived “strategic text sequences” can manipulate LLMs to favor particular products, even when there's no overt ad placement. If influence becomes a game of hidden textual nudges, the integrity of AI-powered answers erodes fast.

The throughline across these tactics is clear: they prioritize influence over alignment. Ads creep into answers, prompts are nudged, assistants risk becoming storefronts, and content is shaped to influence AI. Each buys time, but none resolves the fundamental mismatch: users want discovery and transparency, sellers want qualified intents, and platforms want monetization.

Why Current Fixes Fall Short

The experiments from Microsoft, Google, and Perplexity reveal a fragile truth: shifting from keyword ads to conversational commerce is a profound change. The current ad tactics look innovative on the surface but often feel like old logic patched onto new technology.

1. The Answer–Ad Mismatch and the Trust Deficit

In search-led discovery, ads, in the form of links, were sometimes the answer. If you typed “best credit card for travel,” a sponsored result might genuinely be a top choice.

In AI-led chats, the “answer” should be objective, distilled, and personalized. Dropping in an ad there is a detour right when clarity seems within reach. Imagine asking for the best laptop for photo editing: the AI recommends a MacBook Air 13, but the banner ad on the side is pushing a Dell XPS 13. The risk isn’t mere annoyance or confusion. It’s trust. If people begin to suspect their AI helper serves advertisers more than them, the whole experience collapses.

This concern is very real. Take Perplexity’s “sponsored questions,” for example. The idea is that advertisers pay to suggest follow-ups, but the AI’s answer stays “neutral.” On paper, it’s a balance. In practice, it seems dubious: only the question is sponsored, not the answer, which leaves advertisers with little control and murky ROI, while users are left questioning why a certain brand was surfaced at all.

Google faces a similar puzzle with its AI Overviews. Ads embedded in summaries might be relevant, but when the boundary between sponsored and organic discovery blurs, users wonder, “Am I seeing the best answer or the most profitable one?” This uncertainty undermines the trust that makes AI assistants appealing.

2. Brand Safety and Hallucinations

Even if trust holds, brands face another challenge: hallucinations. Large language models are not fact-checkers; they’re confident storytellers. Real cases of LLMs hallucinating and sharing misinformation already exist: Microsoft’s AI travel guide, for example, came under fire for recommending a food bank as a tourist attraction. So when LLMs are tasked with creating ad copy or inserting sponsored recommendations, errors are inevitable.

Scaling this risk in advertising could be disastrous. AI-generated ads that misstate warranties, fabricate reviews, or give nonsensical advice could damage reputations or, worse, invite legal trouble. Traditional brand safety tools, such as keyword blocks and placement filters, can’t catch errors an AI invents within the ad itself.

3. The Privacy Problem

And then, there’s privacy. Search history might feel transactional, but chat history feels deeply personal. When users discover that their private conversations with AI assistants drive ad targeting, the backlash will be swift. Even if the ads have value, research suggests that people rate systems as manipulative when chat data fuels ads.

Simply put: the current fixes (integrated ads, sponsored prompts, AI Overviews) fail to correct the fundamental mismatch. They add risk more than trust, noise more than meaning. And the solution cannot be patchwork. It must be structural.

Intent-Based Bidding: A Structural Alternative

As we have already established, for two decades, keyword auctions were a clever workaround for a world where intent could only be expressed in fragments: a few words typed into a search box. But now users can state what they want in full sentences, and AI can translate those sentences into structured, verifiable data. Once intent is that clear, why keep guessing with keywords?

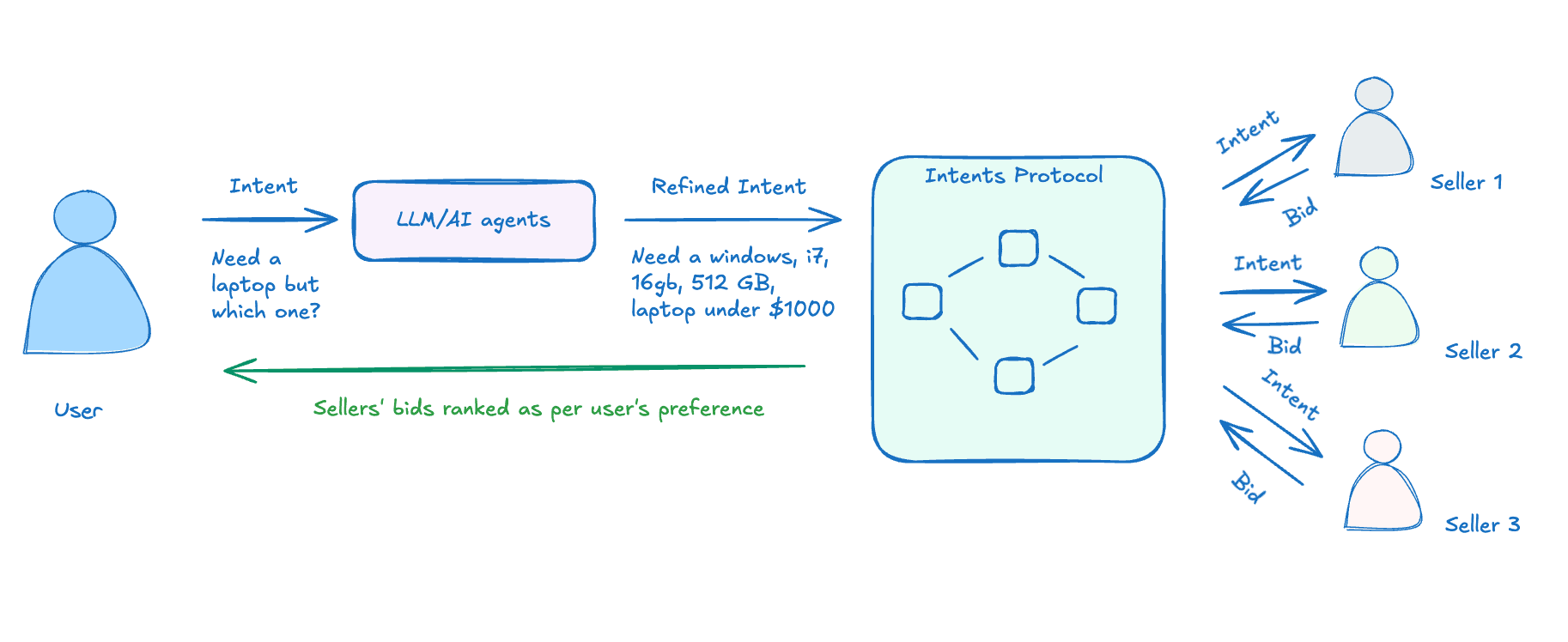

This is where intent-based bidding offers a structural solution. Instead of advertisers competing for vague keyword matches or LLM platforms weaving ads into “neutral” answers, sellers compete directly to fulfill a user’s verified, anonymized intent. It’s commerce aligned with the way people now interact with AI. That’s what we are building with the Intents Protocol.

Here’s how it works:

Intent becomes the unit of competition. When a user asks for “noise-canceling headphones under $300, foldable, with at least 10 hours of battery life for air travel,” that request is captured as precise, machine-readable data. No ambiguity, no guesswork.

Sellers respond to the exact need. Rather than paying for the chance to appear in a search result, sellers bid directly to fulfill that intent. Competitive dynamics push them to put forward their best offers — sharper pricing, better bundles, faster delivery. The winning offer is not the biggest spender but the best fit for the user.
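To make the two steps above concrete, here is a minimal sketch in Python. It is an illustration only, not the Intents Protocol's actual schema or ranking rule: the `Intent` and `Offer` field names, the `seller_bid_usd` field, and the tie-break order (lowest price, then longest battery life) are all assumptions made for this example. The point it shows is that eligibility and ranking depend on how well an offer fits the stated intent, not on how much the seller is willing to spend.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    """Structured form of the headphone request (field names are hypothetical)."""
    category: str
    max_price_usd: float
    min_battery_hours: float
    required_features: frozenset


@dataclass(frozen=True)
class Offer:
    """A seller's response to an intent (field names are hypothetical)."""
    seller: str
    category: str
    price_usd: float
    battery_hours: float
    features: frozenset
    seller_bid_usd: float  # assumed fee paid by the seller; ignored when ranking


def satisfies(intent: Intent, offer: Offer) -> bool:
    """An offer is eligible only if it meets every stated constraint."""
    return (
        offer.category == intent.category
        and offer.price_usd <= intent.max_price_usd
        and offer.battery_hours >= intent.min_battery_hours
        and intent.required_features <= offer.features  # subset check
    )


def best_fit(intent: Intent, offers: list) -> Offer | None:
    """Return the eligible offer with the lowest price, then longest battery."""
    eligible = [o for o in offers if satisfies(intent, o)]
    if not eligible:
        return None
    return min(eligible, key=lambda o: (o.price_usd, -o.battery_hours))


both = frozenset({"noise-canceling", "foldable"})
intent = Intent("headphones", 300.0, 10.0, both)
offers = [
    Offer("seller_a", "headphones", 279.0, 30.0, both, 1.00),
    Offer("seller_b", "headphones", 249.0, 8.0, both, 5.00),  # battery too short
    Offer("seller_c", "headphones", 259.0, 24.0, both, 0.50),
    Offer("seller_d", "headphones", 199.0, 20.0,
          frozenset({"noise-canceling"}), 9.00),  # not foldable
]

winner = best_fit(intent, offers)
print(winner.seller)  # prints "seller_c"
```

In this toy data, the two sellers with the largest assumed bids are filtered out because their products do not meet the request, and the winner happens to carry the smallest bid. A real system would need a richer fit score and a way to verify seller claims; neither is modeled here.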

How Inomy processes intents

And this is why it matters:

The ad is the answer: In this model, there’s no disconnect between commercial and informational layers. The “ad” is the offer that directly satisfies the request. Instead of distracting from the answer, it is the answer.

Users stay in control: Transparency allows users to shape how offers are ranked — whether they want the cheapest option, the fastest delivery, or the strongest warranty. The platform no longer decides in the dark; the user does.

Incentives finally align: In keyword auctions, platforms extracted most of the value while users became the product. Here, users can even share in the value their intent creates. What benefits the user also drives the market.

Built for the agentic future: AI assistants won’t sift through banners or ten blue links. They’ll act on structured intents and transact deterministically. Intent-based bidding is designed for that world — one where agents shop on your behalf.

Open by design: If intent markets are captured by a single platform, we recreate the same gatekeepers we’re trying to escape. A neutral protocol, like the Intents Protocol, ensures fairness and keeps the rails open for sellers, developers, and users alike.
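The “users stay in control” point above can be sketched in a few lines of Python. This is a hypothetical illustration, not a description of how Inomy or the Intents Protocol ranks offers: the `Offer` fields, the preference names, and the `rank_offers` function are assumptions chosen to mirror the three examples in the text (cheapest option, fastest delivery, strongest warranty).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    """Minimal offer record (field names are hypothetical)."""
    seller: str
    price_usd: float
    delivery_days: int
    warranty_months: int


# Each user-selectable preference maps to a sort key; a lower key ranks first.
RANKERS = {
    "cheapest": lambda o: o.price_usd,
    "fastest_delivery": lambda o: o.delivery_days,
    "strongest_warranty": lambda o: -o.warranty_months,
}


def rank_offers(offers, preference):
    """Order offers by the criterion the user picked, not one the platform chose."""
    if preference not in RANKERS:
        raise ValueError(f"unknown preference: {preference!r}")
    return sorted(offers, key=RANKERS[preference])


offers = [
    Offer("seller_a", 279.0, 2, 12),
    Offer("seller_b", 259.0, 5, 24),
    Offer("seller_c", 289.0, 1, 36),
]

for preference in RANKERS:
    ranked = rank_offers(offers, preference)
    print(preference, [o.seller for o in ranked])
```

The same three offers come back in a different order for each preference, and the ordering rule is visible to the user rather than hidden inside the platform. Blending several criteria with user-set weights would be a natural extension, but it is left out to keep the sketch small.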

The result is a healthier balance between discovery and fulfillment. Instead of advertising feeling like noise, it becomes the mechanism that delivers the most relevant solution to the user. In this model, everyone wins: users get clarity and control, sellers compete on value rather than spend, and the ecosystem evolves toward trust and efficiency.

The Future of Commerce Is User-Owned

This shift to intent-based bidding is bigger than refining ad formats. It’s a full inversion of the digital marketplace. Users stop chasing sellers through clicks; instead, sellers compete for user intent. Essentially, with the Intents Protocol, opaque algorithms give way to transparent protocols.

And advertising doesn’t disappear here; it just becomes genuinely useful. Sellers still pay to reach buyers. But buyers control the process, AI agents act faithfully on their behalf, and value flows by linking verified intent with the best offers.

This is the future Inomy and the Intents Protocol are building: an open, user-owned system where ads feel less like noise and more like helpful guidance. In an AI-driven economy, it’s the only path that truly makes sense.